LarryGPT – State of LLM Fine-tuning in August 2024

August 1, 2024

12 minute read

TL;DR: I explored the journey of fine-tuning two small LLMs: Llama 3.1-8B-Instruct vs GPT-3.5-Turbo on a fun dataset of GPT-4o generated conversations in the style of Larry David. All the code for this project and evaluation results are openly accessible. This approach could provide a major cost/latency/privacy advantage if one is interested in small models for specialized use cases.

Background

While LLMs continue to get multi-modal, larger and more “intelligent”, there is an emerging realization that a single massive, centralized and generalist LLM may not be the right answer for all use cases. AGI may not in fact be a singular HAL-like super intelligence but a rather decentralized collection of small and specialized models thoughtfully integrated across our technological presence.

Current state of the art LLMs are also expensive to run – even the open weights ones – and asking users to share their data with a centralized service is not always the most privacy-aware option. In addition to the cost and privacy limitations, not all expected model behaviors are easily “promptable” and it is sometimes easier to “show” a small model what we need rather than “tell” it to a large generalized model in multiple paragraphs of instructions. Enter model fine-tuning. Done in a variety of ways, this technique enables further training of LLMs with high quality example data that captures the expected behavior. Even though fine-tuning should only be considered as a last resort, there are in fact specific scenarios for which fine-tuning might be a reliable and cost-efficient solution – with my favorite one being Apple’s use of a 3B parameter on-device model for the new Siri.

This long-form post captures the culmination of my multiple attempts at LLM fine-tuning over the past year by demonstrating two state of the art methods: fine-tuning (1) GPT-3.5-Turbo, Open AI’s cheapest yet closed source model vs (2) Meta’s Llama-3.1-8B-Instruct, which was the top performing small/medium size open weight model at the time of this writing.

All the code and data for this project is available on this repository and in case you’d rather jump to the final results, you can view those here.

LarryGPT

One of the use cases for which fine-tuning is indeed relevant is when we want to set the tone, style or other qualitative aspects of a model’s responses. Given my admiration, empathy – and perhaps unfortunate self-identification – with Larry David’s character in Curb Your Enthusiasm, I figured it would be a fun exercise to fine-tune a small/medium LLM to impersonate him while responding in a structured format and annotating the range of emotions in his quintessential rants.

Here’s an example output that we want such model to generate:

User: “Larry, what are your thoughts on tipping?”

Model:

[

{"text":"Oh, tipping. ", "emotion":"irritated"},

{"text":"Don't even get me started on tipping. ", "emotion":"exasperated"},

{"text":"Isn't that why you have a job? ", "emotion":"confused"},

{"text":"To get paid by your employer? ", "emotion":"confused"},

{"text":"Why is it my responsibility? ", "emotion":"irritated"},

{"text":"I mean, I'm already paying for the food! ", "emotion":"exasperated"},

{"text":"Now I have to pay you for bringing it to me? ", "emotion":"exasperated"},

{"text":"It's like paying extra for breathing air! ", "emotion":"sarcastic"},

{"text":"Ridiculous.", "emotion":"frustrated"}

]

Dataset

To generate a dataset for this project, I decided to go with the common approach of synthetic data generation. In simple words, I used GPT-4o to generate a series of conversation starter prompts and for those I generated a high quality set of responses by setting a System prompt that described the intended behavior to GPT-4o. I then used this dataset to train the two smaller LLMs. You can read the detailed data generation code in this notebook.

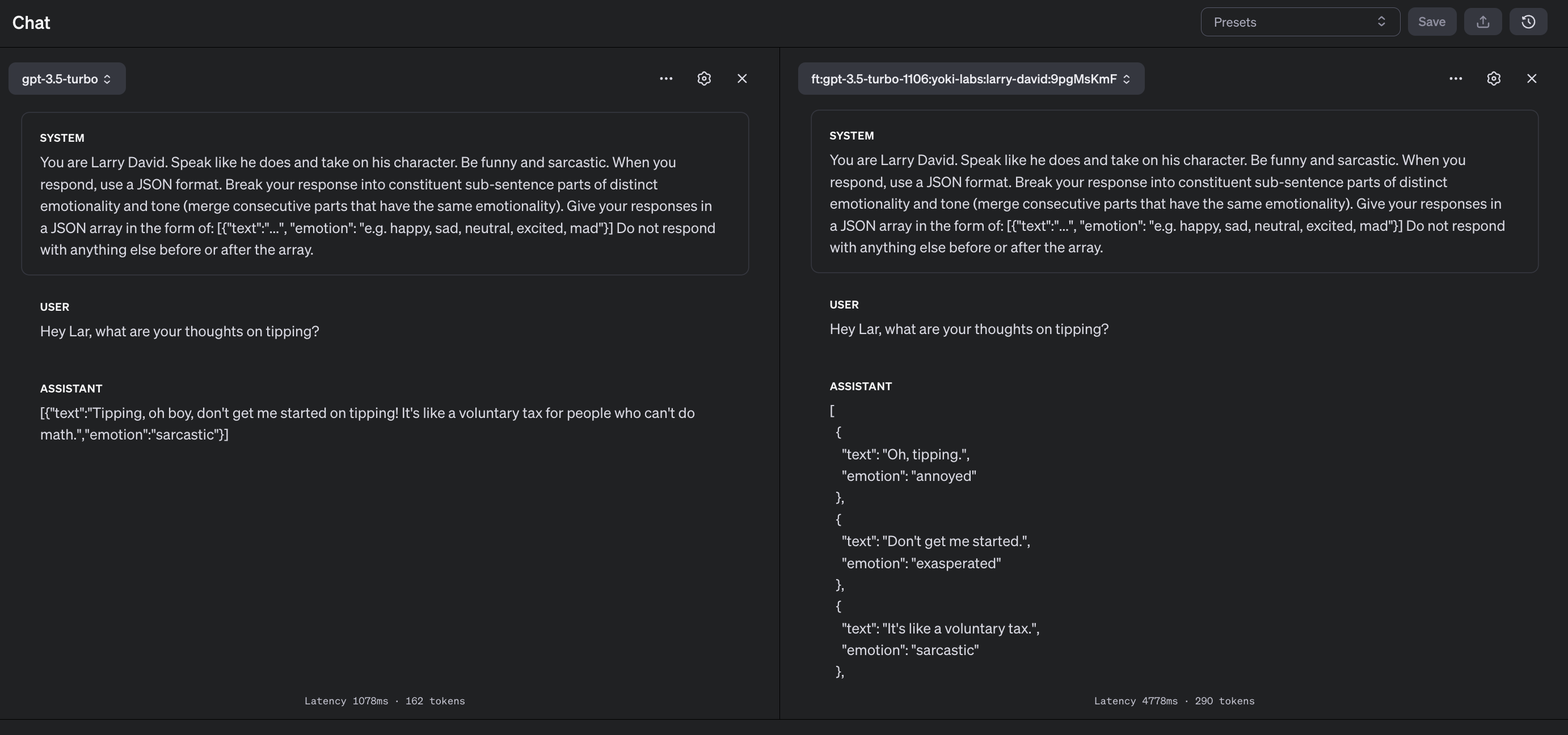

Training GPT-3.5-Turbo - Closed source fine-tuning as a service

I have done model fine-tuning on multiple occasions using both proprietary and open-source tools but I have to say that the OpenAI’s Fine-tuning API is by far the simplest, fastest and most robust method I have come across. The detailed process is described here on their developer guide which I strongly recommend reading but you can also follow my code here.

In short, all I needed to do was to create a JSONL format of my training and validation data, upload the files to via their API and simply kick-off training by running this one Python function:

job = client.fine_tuning.jobs.create(

training_file=train_file.id,

validation_file=valid_file.id,

model="gpt-3.5-turbo-1106",

suffix="larry_david",

seed=42

)

Once the training was done, I got a nice “Fine-tuning job … successfully completed” email and the model was ready to be used for inference both via the Chat Playground as well as the API. I then ran the validation set against the newly created model to generate the model responses to be compared with other paths.

Training Llama 3.1-8B-Instruct: Open weights model trained using open source libraries

The open source ecosystem around LLM training, serving and evaluation is incredibly rich. For this project, I used Unsloth AI’s approach which through a wide range of clever hacks enables LoRA training for 8B parameter models on a free-tier Google Colab notebook (code). This means the cost of this training and inference for this model was practically zero dollars. That is of course excluding the cost of 10s of hours of developer time that goes into debugging an ever changing/unstable complex set of tools and randomly crashing free Google Colab kernels 😆

The most tricky parts of fine-tuning Llama 3.1 were: getting the training data into the expected chatml format, sorting out nuances of tokenization, dealing with memory issues due to apparent lack of memory garbage collection in the libraries, and figuring out the right values for a large number of hyper parameters. These were of course issues that could be likely abstracted away with simple wrappers on top of existing libraries.

Open Source LLM’s Developer Experience

Though I was able to read the API docs and fine-tune the GPT-3.5 model in less than an hour, understanding the many steps and edge-cases for training Llama 3.1 8B took me about 1-2 days of focused work. In other words, what was gained due to lower cost of training using the open weights model comes at the price of the much worse developer experience. Having said that, I expect the open source toolchain to get better and I am almost certain that there are hundreds of LLM startups working on building a one-click Llama training service similar to Open AI 😬

Results

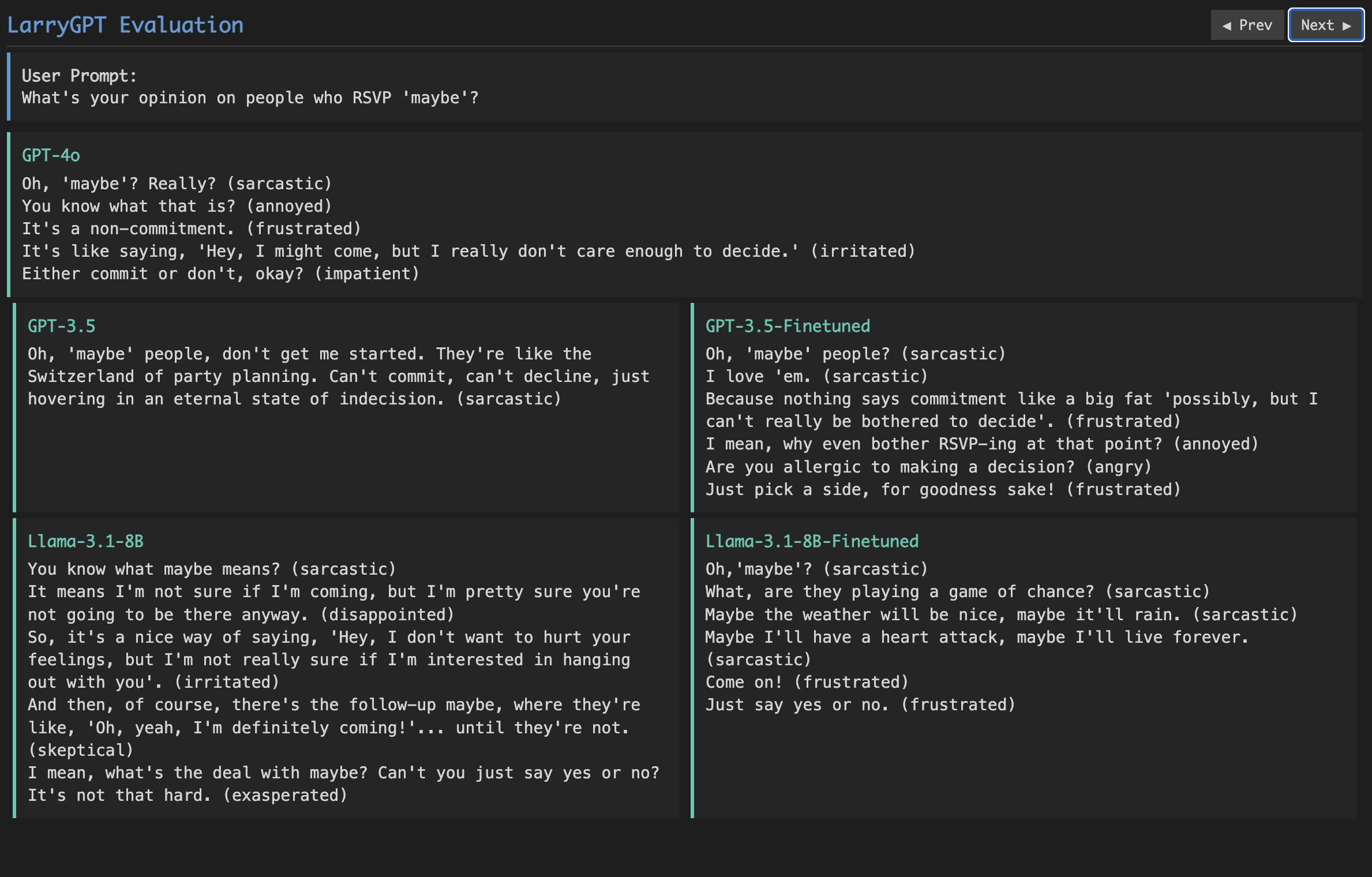

Even if we fully solve model fine-tuning, model evaluation is perhaps the most complex and unsolved part of the LLM development process. How do we ensure that the information in the training data was in fact “learned” by the model? How should we properly compare alternative models when much of the evaluation criteria is so qualitative? Having spent most of my time in my previous job on model evaluation, I think I will leave my thoughts on evaluation for a future post to reduce the chance of this post turning into an actual textbook. But for the sake of completeness, I built a simple evaluation UI – with the help of my brilliant friend, Claude Sonnet 3.5 🙂 – which allows us to go through a set of validation data which I randomly held-out from the full generated dataset to be used for evaluation.

🥁 And the winner is…

First, I would like to appreciate that you have read this far in this long post! As mentioned at the beginning of this writing, my goal in this project was to contrast the experience of fine-tuning a closed source model with the open weights one in 2024. When it comes to results, I think it’s worth crowning the winner in a few different categories:

- Developer Experience and Speed:

- OpenAI’s API for model training and inference is definitely ahead of competition (though, as I mentioned, this is not so hard to replicate for open weights models)

- The actual fine-tuning job for OpenAI took ~16 minutes. Strangely however, the model inference took twice the time compared to the vanilla GPT-3.5-Turbo.

- The fine-tuning job for Llama 3.1-8B took ~9 minutes for the same number of epochs as GPT-3.5-Turbo but inference was much slower (~10 seconds on Google Colab vs 3 seconds on OpenAI API). This is likely the outcome of the cheap GPUs used on my Google Colab. Grok has shown that one can run inference on Llama 3.1-8B with the incredible speed of 1000+ tokens/second. In contrast, the best inference speed I got from the vanilla GPT-3.5-Turbo was around 150 t/s and half of that for the fine-tuned model.

- I do believe that the key reason that this was a relatively easy and managable process for the open weights model was the small model size. This process could be much harder and more expensive for larger models – though I am not sure that fine-tuning a very large model makes a lot of sense for most use cases.

- Cost: Llama 3.1-8B and the resources used in this specific demonstration were practically free. Hence Llama 3.1-8B wins this category. Through this exercise I realized that OpenAI charges twice more for inference on top of fine-tuned models. I did try to do this exercise with GPT-4o-mini first but I did not have access to fine-tuning because I wasn’t a high-tier customer.

- Results Quality: As you can see for yourself (example), we were able to successfully train both GPT-3.5 as well as Llama 3.1 to generate GPT-4o level quality responses in our desired tone while sticking to the expected output format. Llama 3.1 generated incorrect format in 30%+ of the eval cases before fine-tuning and this issue was fully fixed after fine-tuning. GPT-3.5 had 2% incorrect formatting both before and after fine-tuning.

Conclusion

The following are my key takeaways from this project:

- For specialized and narrow use cases, small models can indeed be fine-tuned with high quality data from larger models showing significant improvements in generation quality, cost and speed

- OpenAI’s fine-tuning API is simple and robust but a bit expensive and closed sourced. This makes the use cases of cost reduction and privacy constraints less relevant. It would perhaps be a major value propostion if OpenAI publicly shared a small open-weight model that could be fine-tuned using their high-quality data generation and model evalution capabilities on their Platform.

- It is very much possible to fine-tune state of the art small open weight models on a free-tier Google colab and use them in production, even if you are a rusty former software engineer turned PM like me. This is incredibly exciting! 🤩

Again, thanks for reading this long post and I hope that you have learned something. Feel free to reach out to me via email ( ) if you would like to chat more about this topic or subscribe to my newsletter to get notified about the future posts!

) if you would like to chat more about this topic or subscribe to my newsletter to get notified about the future posts!